Terraformed Odyssey: From Code to Day Two Operation & Beyond

They say that to master a new technology, you have to play with it. While learning a new technology, I always write down the questions that pop up in my mind and document the answers as I find them. You can access my study notes at notes.sreboy.com.

This series of articles will be a refined version of my notes. I will try to cover the most important concepts and best practices I learned from documentation, exploring source code on GitHub, GitHub issue threads, other articles, YouTube videos, and most importantly, through hands-on experimentation and embracing the creative chaos of exploration, much like assembling LEGO blocks, where I constantly experiment and combine different elements to learn.

In this article we will deploy the following, using Terraform as much as possible and with the minimum ClickOps required:

- EKS cluster using terraform resources (No Modules).

- Kube Prometheus Stack with Loki (Helm).

- Two Ingress Nginx Controllers (Internal and External).

- Basic Auth for Ingress. (Soon External auth using Keycloak with Terraform).

- Configure Route53 with Split Horizon DNS.

- Install Cert-Manager and configure it to automate the dns-01 challenge.

- Restrict access to Route53 records by using IRSA.

- Utilize sealed-secrets to store sensitive data in git. And Integrate it with kustomize.

- Configure AWS Client VPN and AWS IAM Identity Center with:

- SSO: SAML based Federated Authentication.

- Active Directory Authentication.

- Deploy ArgoCD with app-of-apps pattern. And then Deploy:

- Send ArgoCD and Alert Manager Notifications to Slack.

- Utilize kustomize to:

- Deploy custom dashboards to Grafana.

- Handle Sealed Secrets and ConfigMaps.

Besides that we will discuss:

- How to build multi architecture Docker images.

- OpenVPN over Shadowsocks to bypass Deep Packet Inspection.

- Split Horizon DNS.

We will go through many concepts, some with advanced configurations, but at a quick pace, because at the end of the day this is a From Code to Day Two Operation article. In the coming articles, however, we will take a deep dive into each concept, e.g.:

- Docker Engine: namespaces, cgroups, pivot_root, etc.

- Provision and Monitor a Highly Available Kubernetes Cluster from Scratch. E.g. monitor Certificates expiration dates. Besides, applying best practices to secure the cluster.

- Maintaining a HA etcd cluster in production.

- Many more...

Before we start you can access the code at:

GoViolin

This app is written in Go. It doesn't have any database or storage dependencies; it is just a simple web app that serves static content.

Run Locally

- Method One

- Method Two

- Method Three

go run $(ls -1 *.go | grep -v _test.go)

go run main.go home.go scale.go duet.go

go build -o main

./main

Dockerfile

We aim for our docker image to be as minimal as possible. So we will use multi-stage builds to achieve this. Also, supporting amd64 and arm64 architectures is a MUST for our app. Check REFERENCES section for useful resources.

In summary, we aim for a minimal multi-stage and multi-platform Docker image:

FROM --platform=$BUILDPLATFORM golang:1.21.5 AS builder

WORKDIR /app

COPY go.mod go.sum /app/

RUN go mod download

COPY . .

ARG TARGETOS TARGETARCH

RUN CGO_ENABLED=0 GOOS=${TARGETOS} GOARCH=${TARGETARCH} go build -o main

FROM --platform=$TARGETPLATFORM scratch

WORKDIR /app

COPY --from=builder /app/ /app/

EXPOSE 8080

LABEL org.opencontainers.image.tags="ziadmmh/goviolin:v0.0.1,ziadmmh/goviolin:latest"

LABEL org.opencontainers.image.authors="ziadmansour.4.9.2000@gmail.com"

CMD ["/app/main"]

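Please do NOT forget the CGO_ENABLED=0 flag, or you will face a weird error that is hard to debug. Without it, the Go binary may be dynamically linked against libc, which the scratch image does not contain. Enjoy this good read after you finish :)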

The term multi-platform image refers to a bundle of images for multiple different architectures. Out of the box, the default builder for Docker Desktop doesn't support building multi-platform images.

Enabling the containerd image store lets you build multi-platform images and load them to your local image store.

The containerd image store is NOT enabled by default. To enable the feature for Docker Desktop:

- Navigate to Settings in Docker Desktop.

- In the General tab, check Use containerd for pulling and storing images.

- Select Apply & Restart.

To disable the containerd image store, clear the Use containerd for pulling and storing images checkbox. Please, do refer to the docs first.

docker info -f '{{ .DriverStatus }}'

[[driver-type io.containerd.snapshotter.v1]]

Build Image

- Containerd Image Store

- Docker Buildx

docker build --platform linux/arm64,linux/amd64 --progress plain -t ziadmmh/goviolin:v0.0.1 --push .

docker buildx build --platform linux/arm64,linux/amd64 --progress plain -t ziadmmh/goviolin:v0.0.1 --push .

GitHub Actions

This is a dummy GitHub Actions workflow that builds the image, extracts the image tags from the LABEL in the Dockerfile, and then pushes the image to the Docker Hub GoViolin repository.

A better idea is to use the docker/metadata-action to derive the image tags, e.g. from a Git tag pushed to the repository or from the commit hash.
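To make the extraction step concrete, here is a minimal Python sketch of the same idea: read the org.opencontainers.image.tags LABEL from a Dockerfile and derive the version from the first tag. It is not the awk command the workflow uses. The names extract_tags and tag_version are hypothetical, and the sample Dockerfile text only mirrors the LABEL shown in the GoViolin Dockerfile.

```python
import re

# Matches: LABEL org.opencontainers.image.tags="repo/name:tag[,repo/name:tag...]"
LABEL_RE = re.compile(
    r'^LABEL\s+org\.opencontainers\.image\.tags="([^"]*)"',
    re.MULTILINE,
)


def extract_tags(dockerfile_text: str) -> list[str]:
    """Return the comma-separated image tags from the LABEL, or [] if absent."""
    match = LABEL_RE.search(dockerfile_text)
    if match is None:
        return []
    return [tag.strip() for tag in match.group(1).split(",") if tag.strip()]


def tag_version(image_tag: str) -> str:
    """Return the part after the last ':' (e.g. 'v0.0.1'), or '' if there is none."""
    _, sep, version = image_tag.rpartition(":")
    return version if sep else ""


# Hypothetical sample input that mirrors the LABEL from the Dockerfile above.
SAMPLE_DOCKERFILE = '''\
FROM scratch
LABEL org.opencontainers.image.tags="ziadmmh/goviolin:v0.0.1,ziadmmh/goviolin:latest"
'''

if __name__ == "__main__":
    tags = extract_tags(SAMPLE_DOCKERFILE)
    print(tags)
    print(tag_version(tags[0]))
```

Taking the version from the first tag only keeps the result usable when the LABEL lists several tags separated by commas.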


name: Test, Build, and Push Multi-Arch Image

on:
  push:
    branches:
      - master
  workflow_dispatch:

env:
  TAGS:
  TAG_VERSION:
  REPOSITORY:
  BRANCH_NAME:

permissions: write-all

jobs:
  test-and-build:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4

      - name: Set up QEMU
        uses: docker/setup-qemu-action@v3

      - name: Set up Docker Buildx
        uses: docker/setup-buildx-action@v3

      - name: Run Go Tests
        run: go test ./...

      - name: Login to Docker Hub
        uses: docker/login-action@v3
        with:
          username: ${{ secrets.DOCKERHUB_USERNAME }}
          password: ${{ secrets.DOCKERHUB_TOKEN }}

      - name: Extract metadata from Dockerfile
        run: |
          # Values written to $GITHUB_ENV are only visible in later steps,
          # so keep TAGS in a shell variable to derive TAG_VERSION here.
          TAGS="$(awk '/^LABEL org.opencontainers.image.tags/{gsub(/"/,"",$2); gsub(".*=",""); print }' Dockerfile)"
          echo "TAGS=$TAGS" >> "$GITHUB_ENV"
          # Use the first tag only, in case the LABEL lists several.
          echo "TAG_VERSION=$(echo "$TAGS" | cut -d, -f1 | cut -d: -f2)" >> "$GITHUB_ENV"

      - name: Check if TAGS is set
        run: |
          if [ -z "${{ env.TAGS }}" ]; then
            echo "TAGS environment variable is not set. Please set it before running this workflow."
            exit 1
          fi

      - name: Build and Push Multi-Arch Docker Image
        uses: docker/build-push-action@v5
        with:
          context: .
          platforms: linux/amd64,linux/arm64
          tags: ${{ env.TAGS }},ziadmmh/goviolin:latest
          push: true

      - name: Set Environment Variables
        run: |
          echo "REPOSITORY=ZiadMansourM/terraformed-odyssey" >> $GITHUB_ENV
          echo "BRANCH_NAME=update-goviolin-image-$RANDOM" >> $GITHUB_ENV

      - name: Checkout Code
        uses: actions/checkout@v4
        with:
          repository: ${{ env.REPOSITORY }}
          token: ${{ secrets.GH_CLI_TOKEN }}
          ref: main
          path: terraformed-odyssey

      - name: Checkout Branch and Update Image Tag
        working-directory: terraformed-odyssey/kubernetes/goviolin
        run: |
          git checkout -b "${{ env.BRANCH_NAME }}"
          rm -rf live && mkdir -p live
          kustomize edit set image ziadmmh/goviolin:${{ env.TAG_VERSION }}
          kustomize build > live/live.yaml

      - name: Update Image Tag and Send pull-request
        working-directory: terraformed-odyssey/kubernetes/goviolin
        run: |
          git config user.name "github-actions[bot]"
          git config user.email "41898282+github-actions[bot]@users.noreply.github.com"
          git status
          git add live/live.yaml
          git commit -m "Update goviolin image tag to ${{ env.TAGS }}"
          git push origin ${{ env.BRANCH_NAME }}

      - name: Create Pull Request
        working-directory: terraformed-odyssey/kubernetes/goviolin
        run: |
          echo "${{ secrets.GH_CLI_TOKEN }}" > token.txt
          gh auth login --with-token < token.txt
          gh pr create \
            --title "Update goviolin image tag to ${{ env.TAGS }}" \
            --body "This PR updates the goviolin image tag to ${{ env.TAGS }}." \
            --base "main" \
            --head "${{ env.BRANCH_NAME }}"

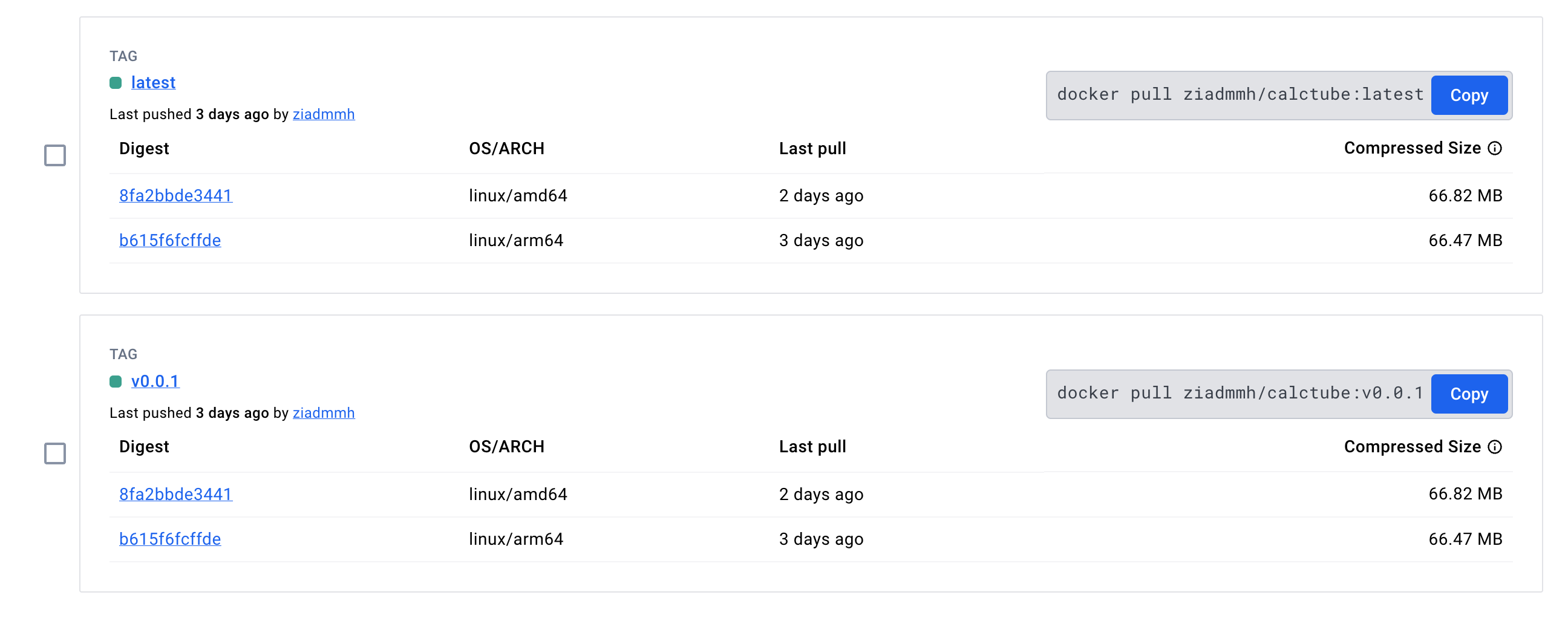

CalcTube

I built this app to help me during my final exams. It calculates the time needed to watch a playlist on YouTube. You just need to provide the playlist URL or ID, and it returns the watch time corresponding to each playback speed.

Code

The code is straightforward and easy to understand. It uses the pytube library to fetch playlist and video metadata from YouTube. Have a look, and please reach out if you have any questions ^^.


from concurrent.futures import ThreadPoolExecutor
import re
import time

from fastapi import FastAPI, Request
from fastapi.responses import JSONResponse, HTMLResponse, FileResponse
from fastapi.templating import Jinja2Templates
from pydantic import BaseModel
from pytube import Playlist, YouTube

app = FastAPI()
templates = Jinja2Templates(directory="templates")


@app.get("/assets/{filename}")
async def read_item(filename: str):
    return FileResponse(f"templates/assets/{filename}")


def timeit(func):
    # Log how long the wrapped function takes to run.
    def wrapper(*args, **kwargs):
        print(f"Started {func.__name__}...")
        start_time = time.time()
        result = func(*args, **kwargs)
        end_time = time.time()
        print(f"Done {func.__name__} took {(end_time - start_time)*1000:.2f} ms to execute.")
        return result
    return wrapper


def get_playlist_id(link: str) -> str:
    # A video URL that carries a playlist: ...watch?v=<video>&list=<playlist>
    video_pattern = r'(https?://)?(www\.)?(youtube\.com/watch\?v=)?([a-zA-Z0-9_-]+)&?list=([a-zA-Z0-9_-]+)'
    if match := re.match(video_pattern, link):
        return match[5]
    # A playlist URL or a bare playlist ID.
    playlist_pattern = r'(https?://)?(www\.)?(youtube\.com/playlist\?list=)?([a-zA-Z0-9_-]+)'
    return match[4] if (match := re.match(playlist_pattern, link)) else link


def get_video_length(url: str) -> int:
    yt = YouTube(url)
    return yt.length


def get_playlist_duration(playlist_url: str) -> tuple[int, int, float]:
    playlist_id = get_playlist_id(playlist_url)
    playlist = Playlist(f"https://www.youtube.com/playlist?list={playlist_id}")
    video_count = len(playlist.video_urls)
    total_seconds = 0
    # ThreadPoolExecutor requires max_workers > 0, so guard against an empty playlist.
    with ThreadPoolExecutor(max_workers=max(1, video_count)) as executor:
        total_seconds = sum(executor.map(get_video_length, playlist.video_urls))
    avg_video_length = total_seconds / video_count if video_count != 0 else 0
    return total_seconds, video_count, avg_video_length


def calculate_speed_times(total_seconds: int) -> dict:
    speeds = [1, 1.25, 1.5, 1.75, 2]
    times = {}
    for speed in speeds:
        time_at_speed = total_seconds / speed
        hours = int(time_at_speed // 3600)
        minutes = int((time_at_speed % 3600) // 60)
        seconds = int(time_at_speed % 60)
        times[speed] = f"{hours} hours, {minutes} minutes, {seconds} seconds"
    return times


def format_time(seconds: float) -> str:
    hours = int(seconds // 3600)
    minutes = int((seconds % 3600) // 60)
    seconds = int(seconds % 60)
    return f"{hours} hours, {minutes} minutes, {seconds} seconds"


@timeit
def run(user_input: str):
    total_seconds, video_count, avg_video_length = get_playlist_duration(user_input)
    times = calculate_speed_times(total_seconds)
    return {
        "videoCount": video_count,
        "avgVideoLength": format_time(avg_video_length),
        "speedTimes": dict(times.items()),
    }


@app.get("/", response_class=HTMLResponse)
async def read_item(request: Request):
    print("Here")
    return templates.TemplateResponse("index.html", {"request": request})


class PlaylistUrl(BaseModel):
    playlistUrl: str


@app.post("/calculate")
async def calculate_playlist_duration(playlist_url: PlaylistUrl):
    print("there")
    response_data = run(playlist_url.playlistUrl)
    return JSONResponse(content=response_data)
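The arithmetic behind the speedTimes part of the response can be tried without FastAPI or pytube. The sketch below is a standalone approximation of calculate_speed_times and format_time, not the app's code path; speed_times is a hypothetical helper name, and the 5400-second total (a 90-minute playlist) is an example value.

```python
def format_time(seconds: float) -> str:
    """Render a duration in seconds as 'H hours, M minutes, S seconds'."""
    hours = int(seconds // 3600)
    minutes = int((seconds % 3600) // 60)
    secs = int(seconds % 60)
    return f"{hours} hours, {minutes} minutes, {secs} seconds"


def speed_times(total_seconds: float, speeds=(1, 1.25, 1.5, 1.75, 2)) -> dict:
    """Map each playback speed to the formatted watch time at that speed."""
    return {speed: format_time(total_seconds / speed) for speed in speeds}


if __name__ == "__main__":
    # Example input: a playlist that is 90 minutes long at normal speed.
    for speed, label in speed_times(5400).items():
        print(f"{speed}x -> {label}")
```

Watching at 2x halves the total, so the 90-minute example comes out as 45 minutes; the other speeds divide the total in the same way.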

Dockerfile

It is actually advised to use a requirements.txt file to install the dependencies. But for the sake of simplicity I will hard-code the dependency installs directly in the Dockerfile, without any version pinning.

FROM python:3.11-slim

WORKDIR /app

RUN pip install --no-cache-dir fastapi

RUN pip install --no-cache-dir uvicorn

RUN pip install --no-cache-dir pytube

RUN pip install --no-cache-dir jinja2

COPY main.py /app/

COPY templates /app/templates

EXPOSE 80

LABEL org.opencontainers.image.tags="ziadmmh/calctube:v0.0.1"

LABEL org.opencontainers.image.authors="ziadmansour.4.9.2000@gmail.com"

CMD ["uvicorn", "main:app", "--host", "0.0.0.0", "--port", "80"]

GitHub Actions

Same as the GoViolin app, we will use a dummy GitHub Actions workflow that builds, extracts the image labels from Dockerfile, and then pushes the image to Docker Hub CalcTube Repository.


name: Test, Build, and Push Multi-Arch Image

on:
  push:
    branches:
      - main
  workflow_dispatch:

env:
  TAGS:
  TAG_VERSION:
  REPOSITORY:
  BRANCH_NAME:

permissions: write-all

jobs:
  test-and-build:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4

      - name: Set up QEMU
        uses: docker/setup-qemu-action@v3

      - name: Set up Docker Buildx
        uses: docker/setup-buildx-action@v3

      - name: Login to Docker Hub
        uses: docker/login-action@v3
        with:
          username: ${{ secrets.DOCKERHUB_USERNAME }}
          password: ${{ secrets.DOCKERHUB_TOKEN }}

      - name: Extract metadata from Dockerfile
        run: |
          # Values written to $GITHUB_ENV are only visible in later steps,
          # so keep TAGS in a shell variable to derive TAG_VERSION here.
          TAGS="$(awk '/^LABEL org.opencontainers.image.tags/{gsub(/"/,"",$2); gsub(".*=",""); print }' Dockerfile)"
          echo "TAGS=$TAGS" >> "$GITHUB_ENV"
          echo "TAG_VERSION=$(echo "$TAGS" | cut -d, -f1 | cut -d: -f2)" >> "$GITHUB_ENV"

      - name: Check if TAGS is set
        run: |
          if [ -z "${{ env.TAGS }}" ]; then
            echo "TAGS environment variable is not set. Please set it before running this workflow."
            exit 1
          fi

      - name: Build and Push Multi-Arch Docker Image
        uses: docker/build-push-action@v5
        with:
          context: .
          platforms: linux/amd64,linux/arm64
          tags: ${{ env.TAGS }},ziadmmh/calctube:latest
          push: true

Just double check the image tags pushed and note the OS/ARCH supported.

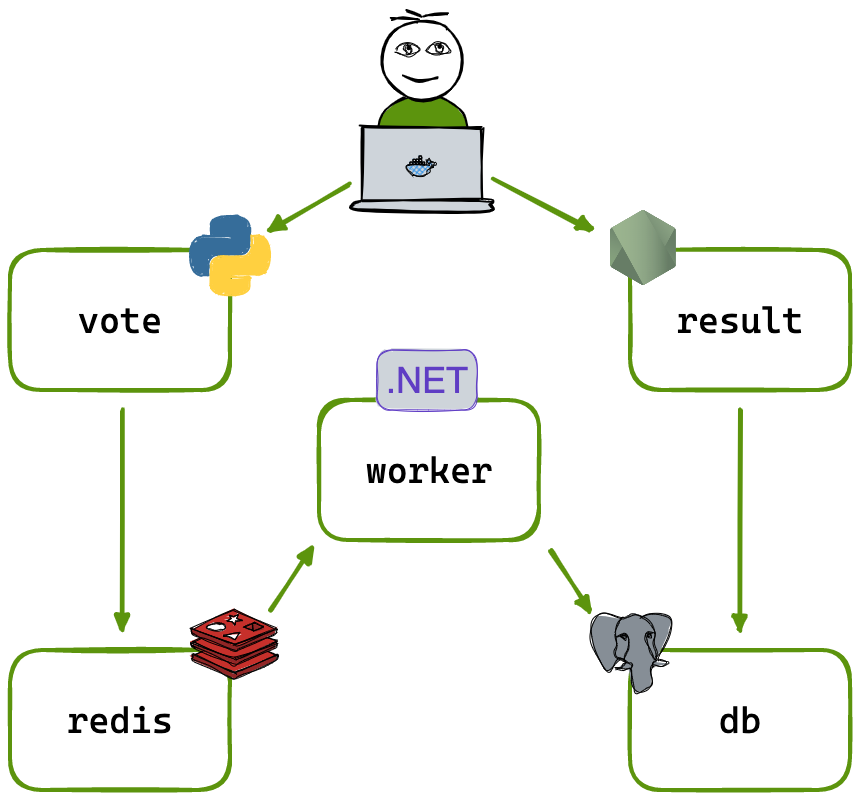
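The OS/ARCH check can also be done from a terminal. A multi-platform tag points to an image index whose manifests each carry a platform entry, and one way to obtain that JSON is docker buildx imagetools inspect --raw followed by the image name. The sketch below only parses such a document. SAMPLE_INDEX is a hand-written, hypothetical index (digests and sizes omitted), list_platforms is a hypothetical helper name, and the unknown/unknown entry stands in for the attestation manifests that buildx may add.

```python
import json

# Hypothetical, hand-written image index; digests and sizes are omitted.
SAMPLE_INDEX = """
{
  "schemaVersion": 2,
  "mediaType": "application/vnd.oci.image.index.v1+json",
  "manifests": [
    {"platform": {"architecture": "amd64", "os": "linux"}},
    {"platform": {"architecture": "arm64", "os": "linux"}},
    {"platform": {"architecture": "unknown", "os": "unknown"}}
  ]
}
"""


def list_platforms(index_json: str) -> list[str]:
    """Return 'os/arch' for every manifest that declares a real platform."""
    index = json.loads(index_json)
    platforms = []
    for manifest in index.get("manifests", []):
        platform = manifest.get("platform", {})
        os_name = platform.get("os")
        arch = platform.get("architecture")
        # Skip entries with a missing or "unknown" platform.
        if not os_name or not arch or "unknown" in (os_name, arch):
            continue
        platforms.append(f"{os_name}/{arch}")
    return platforms


if __name__ == "__main__":
    print(list_platforms(SAMPLE_INDEX))
```

For the images built in this article, the expectation is that both linux/amd64 and linux/arm64 show up.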

Voting App

The voting app is no different from the above setup. Please refer to the original repository and my fork of it for more information. And do reach out if you have any questions ^^.

Plan

Each of your apps has its own GitHub repository, and GitHub Actions is responsible for building and pushing the images to Docker Hub. ArgoCD then syncs the manifests that reference those images and deploys them to the EKS cluster. So in total we aim for four repositories. The three repositories containing the application code can be configured as you like. The terraformed-odyssey repo, however, contains the application manifests according to GitOps principles. I have configured it as follows, and that layout affects how ArgoCD deploys the applications:


ziadh@Ziads-MacBook-Air terraformed-odyssey % tree

.

├── argocd

│ ├── app-of-apps

│ │ ├── calctube.yaml

│ │ ├── goviolin.yaml

│ │ ├── system.yaml

│ │ └── voting-app.yaml

│ └── root-app

│ └── root-app.yaml

├── kubernetes

│ ├── README.md

│ ├── calctube

│ │ ├── 00-namespace.yaml

│ │ ├── 01-deployment.yaml

│ │ ├── 02-service.yaml

│ │ ├── 03-ingress.yaml

│ │ ├── files

│ │ │ └── auth

│ │ ├── kustomization.yaml

│ │ └── live

│ │ └── live.yaml

│ ├── goviolin

│ │ ├── 00-namespace.yaml

│ │ ├── 01-deployment.yaml

│ │ ├── 02-service.yaml

│ │ ├── 03-ingress.yaml

│ │ ├── files

│ │ │ └── auth

│ │ ├── kustomization.yaml

│ │ └── live

│ │ └── live.yaml

│ ├── system

│ │ ├── argocd-ingress.yaml

│ │ ├── argocd-notifications-cm.yaml

│ │ ├── components

│ │ │ └── sealed-secret-config.yaml

│ │ ├── dashboards

│ │ │ ├── argocd-14584.json

│ │ │ ├── cert-manager-20842.json

│ │ │ ├── ingress-nginx-14314.json

│ │ │ └── loki-14055.json

│ │ ├── kustomization.yaml

│ │ ├── live

│ │ │ └── live.yaml

│ │ ├── monitoring-ingress.yaml

│ │ ├── sealed-argocd-notifications-secret.yaml

│ │ └── secrets

│ │ └── argocd-notifications-secret-ignore.yaml

│ └── voting-app

│ ├── db-deployment.yaml

│ ├── db-service.yaml

│ ├── ingress.yaml

│ ├── kustomization.yaml

│ ├── live

│ │ └── live.yaml

│ ├── namespace.yaml

│ ├── redis-deployment.yaml

│ ├── redis-service.yaml

│ ├── result-deployment.yaml

│ ├── result-service.yaml

│ ├── vote-deployment.yaml

│ ├── vote-service.yaml

│ └── worker-deployment.yaml

└── terraform

├── 00_foundation

│ ├── 00-locals.tf

│ ├── 01-vpc.tf

│ ├── 02-igw.tf

│ ├── 03-subnets.tf

│ ├── 04-nat-gw-eip.tf

│ ├── 05-rt-rta.tf

│ ├── 06-eks.tf

│ ├── 07-node-group.tf

│ ├── providers.tf

│ ├── terraform.tfstate

│ ├── terraform.tfstate.backup

│ └── variables.tf

├── 10_platform

│ ├── 00-kube-prometheus-stack-loki.tf

│ ├── 01-ingress-nginx.tf

│ ├── 02-route53.tf

│ ├── 03-iam-oidc.tf

│ ├── 04-cert-manager.tf

│ ├── 05-sealed-secret.tf

│ ├── data.tf

│ ├── files

│ │ ├── cert-manager-values.yaml

│ │ ├── external-nginx-values.yaml

│ │ ├── internal-nginx-values.yaml

│ │ ├── kube-prometheus-stack-values.yaml

│ │ ├── loki-distributed-values.yaml

│ │ └── promtail-values.yaml

│ ├── outputs.tf

│ ├── providers.tf

│ ├── terraform.tfstate

│ ├── terraform.tfstate.backup

│ └── variables.tf

└── 15_platform

├── 00-argocd.tf

├── 01-vpn-acm.tf

├── 02-vpn-iam.tf

├── 03-vpn-sg.tf

├── 04-vpn-endpoint.tf

├── data.tf

├── files

│ └── argocd-values.yaml

├── metadata

│ ├── aws-client-vpn-self-service.xml

│ └── aws-client-vpn.xml

├── outputs.tf

├── providers.tf

├── terraform.tfstate

├── terraform.tfstate.backup

└── variables.tf

24 directories, 89 files

We will go through each file in detail, but for a quick overview:

- terraform directory contains the terraform code to provision the EKS cluster and the needed resources.

- kubernetes directory contains the manifests for the applications we will deploy.

- argocd directory contains the ArgoCD manifests for the applications and ArgoCD itself.

Now let's discuss the contents of the terraform directory.

00_Foundation Layer

- VPC.

- Internet Gw.

- Subnets.

- Elastic IPs.

- NAT Gateways.

- Route Tables, Route Tables Association.

- eks-cluster-role, eks-cluster-role-attachment then EKS Cluster.

- eks-node-group-general-role and its Three different eks-node-group-general-role-attachment. Then aws_eks_node_group.

10_Platform Layer

- Kube Prometheus Stack and Loki.

- Ingress Nginx Controllers.

- Route53 Split Horizon DNS.

- Cert-Manager.

- Sealed Secrets.

15_Platform Layer

- ArgoCD.

- VPN.

Pre-requisites

First make sure you have installed aws-cli and created a terraform user with programmatic access from the AWS Console.

AWS CLI

Follow the official installation guide to install the latest aws-cli version compatible with your operating system, then verify the installation:

aws --version

aws-cli/2.15.38 Python/3.11.8 Darwin/23.4.0 exe/x86_64 prompt/off

The AWS CLI version 2 is the most recent major version of the AWS CLI and supports all of the latest features. Some features introduced in version 2 are NOT backported to version 1 and you must upgrade to access those features.

Terraform User

- Open AWS Console then navigate to IAM Service.

- Click on Users then Create User.

- Name user terraform.

- Click Next then Add user to group and name it admin-access-automated-tools. Attach the AdministratorAccess policy then click Create user group. Next again, and finally Create User.

- Navigate to terraform user and select Security Credentials tab.

- Click Create access key and Select under use case Command Line Interface (CLI).

- Read Alternatives recommended, if you are okay check I understand and click Create.

- Provide a description e.g. Terraform Programmatic Access then Create access key.

- Download .csv file and store it in a safe place.

- Never store your access key in plain text, in a code repository, or in code.

- Disable or delete access key when no longer needed.

- Enable and stick to least-privilege permissions.

- Rotate access keys regularly.

- For more details about managing access keys, see the best practices for managing AWS access keys.

cat $PATH_TO_CREDENTIALS_FILE/terraform_accessKeys.csv

# Enter region: eu-central-1

# Enter output format: json

aws configure --profile terraform

# To verify

cat ~/.aws/config

cat ~/.aws/credentials

00_Foundation

In this section we will provision the VPC, Internet GW, Subnets, Elastic IPs, NAT Gateway, Route Tables, EKS Cluster, EKS Node Groups, and IAM roles and policies needed.

Note that provisioning the 00_foundation layer took me:

- ~12 minutes to apply.

- ~11 minutes to destroy.

We will be using the following Terraform providers:

Variables

variable "region" {

description = "The AWS region to deploy the resources."

type = string

default = "eu-central-1"

}

variable "profile" {

description = "The AWS profile to use."

type = string

default = "terraform"

}

variable "aws_vpc_main_cidr" {

description = "The CIDR block of the main VPC."

type = string

default = "10.0.0.0/16"

}

variable "cluster_name" {

description = "The name of the EKS cluster."

type = string

default = "eks-cluster-production"

}

variable "eks_master_version" {

description = "The Kubernetes version of the EKS cluster."

type = string

default = "1.28"

}

variable "worker_nodes_k8s_version" {

description = "The Kubernetes version of the EKS worker nodes."

type = string

default = "1.28"

}

variable "node_group_scaling_config" {

description = "The scaling configuration for the EKS node group."

type = object({

desired_size = number

max_size = number

min_size = number

})

default = {

desired_size = 4

max_size = 4

min_size = 4

}

}

Providers

terraform {

required_providers {

aws = {

source = "hashicorp/aws"

version = "5.45.0"

}

}

}

provider "aws" {

region = var.region

profile = var.profile

}

Disclaimer

Because the main.tf file is a bit lengthy, I will break it up here so that it is easier to comment on and to provide additional resources for each group of related resources.

A better approach would have been grouping related resources in different .tf files. But for the sake of simplicity I didn't do it.

Update: I have updated the code and article to reflect the best practices.

Local variable

locals {

tags = {

author = "ziadh"

"karpenter.sh/discovery" = var.cluster_name

}

}

Create VPC

You can see more at:

- aws_vpc terraform Resource.

- EKS Network Requirements documentation.

I chose the CIDR block 10.0.0.0/16; you can choose a different one at your convenience. You can visualize the subnets using Subnet Calculator. Also remember the private IPv4 address ranges:

| Prefix | First IP Address | Last IP Address | Number of Addresses |

|---|---|---|---|

| 10.0.0.0/8 | 10.0.0.0 | 10.255.255.255 | 16,777,216 |

| 172.16.0.0/12 | 172.16.0.0 | 172.31.255.255 | 1,048,576 |

| 192.168.0.0/16 | 192.168.0.0 | 192.168.255.255 | 65,536 |
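The address counts in the table, and the four /18 subnets that this article carves out of 10.0.0.0/16 further down, can be double checked with Python's standard ipaddress module. This is a minimal sketch for verification only; address_count and split_vpc are hypothetical helper names and are not part of the Terraform code.

```python
import ipaddress


def address_count(cidr: str) -> int:
    """Return the total number of addresses in a CIDR block."""
    return ipaddress.ip_network(cidr).num_addresses


def split_vpc(cidr: str, new_prefix: int) -> list[str]:
    """Split a CIDR block into equally sized subnets with the given prefix length."""
    network = ipaddress.ip_network(cidr)
    return [str(subnet) for subnet in network.subnets(new_prefix=new_prefix)]


if __name__ == "__main__":
    # The three private ranges from the table.
    for prefix in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16"):
        print(prefix, address_count(prefix))

    # The VPC CIDR split into four /18 blocks (two public, two private).
    for subnet in split_vpc("10.0.0.0/16", 18):
        print(subnet, address_count(subnet))
```

Splitting a /16 into /18 blocks yields exactly four subnets, which is why the two public and two private subnets fill the VPC range completely.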

resource "aws_vpc" "main" {

cidr_block = var.aws_vpc_main_cidr

# Makes instances shared on the host.

instance_tenancy = "default"

# Required for EKS:

# 1. Enable DNS support in the VPC.

# 2. Enable DNS hostnames in the VPC.

enable_dns_support = true

enable_dns_hostnames = true

# Additional Arguments:

assign_generated_ipv6_cidr_block = false

tags = merge(local.tags, { Name = "eks-vpc" })

}

Create Internet Gateway

- aws_internet_gateway terraform Resource.

resource "aws_internet_gateway" "main" {

vpc_id = aws_vpc.main.id

tags = merge(local.tags, { Name = "eks-igw" })

}

Subnets

We need two public and two private subnets. Read more here.

- aws_subnet terraform Resource.

- Visual Subnet Calculator.

- Public Subnet 1

- Public Subnet 2

- Private Subnet 1

- Private Subnet 2

resource "aws_subnet" "public_1" {

vpc_id = aws_vpc.main.id

cidr_block = "10.0.0.0/18"

availability_zone = "${var.region}a"

# Required for EKS: Instances launched into the subnet

# should be assigned a public IP address.

map_public_ip_on_launch = true

tags = merge(

local.tags,

{

Name = "public-${var.region}a"

"kubernetes.io/cluster/${var.cluster_name}" = "shared"

"kubernetes.io/role/elb" = "1"

}

)

}

resource "aws_subnet" "public_2" {

vpc_id = aws_vpc.main.id

cidr_block = "10.0.64.0/18"

availability_zone = "${var.region}b"

# Required for EKS: Instances launched into the subnet

# should be assigned a public IP address.

map_public_ip_on_launch = true

tags = merge(

local.tags,

{

Name = "public-${var.region}b"

"kubernetes.io/cluster/${var.cluster_name}" = "shared"

"kubernetes.io/role/elb" = "1"

}

)

}

resource "aws_subnet" "private_1" {

vpc_id = aws_vpc.main.id

cidr_block = "10.0.128.0/18"

availability_zone = "${var.region}a"

tags = merge(

local.tags,

{

Name = "private-${var.region}a"

"kubernetes.io/cluster/${var.cluster_name}" = "shared"

"kubernetes.io/role/internal-elb" = "1"

}

)

}

resource "aws_subnet" "private_2" {

vpc_id = aws_vpc.main.id

cidr_block = "10.0.192.0/18"

availability_zone = "${var.region}b"

tags = merge(

local.tags,

{

Name = "private-${var.region}b"

"kubernetes.io/cluster/${var.cluster_name}" = "shared"

"kubernetes.io/role/internal-elb" = "1"

}

)

}

Pay close attention to:

- The "kubernetes.io/role/elb" tag we had on public subnets vs "kubernetes.io/role/internal-elb" on private subnets. Read more on the docs here.

- The map_public_ip_on_launch = true on public subnets ONLY.

- Without this tag "kubernetes.io/cluster/${var.cluster_name}" the EKS cluster will not be able to communicate with the nodes.

Elastic IPs and NAT GWs

- aws_eip terraform Resource.

- aws_nat_gateway terraform Resource.

- Elastic IP and NAT Gw One

- Elastic IP and NAT Gw Two

resource "aws_eip" "nat_1" {

depends_on = [aws_internet_gateway.main]

}

resource "aws_nat_gateway" "gw_1" {

subnet_id = aws_subnet.public_1.id

allocation_id = aws_eip.nat_1.id

tags = merge(local.tags, { Name = "eks-nat-gw-1" })

}

resource "aws_eip" "nat_2" {

depends_on = [aws_internet_gateway.main]

}

resource "aws_nat_gateway" "gw_2" {

subnet_id = aws_subnet.public_2.id

allocation_id = aws_eip.nat_2.id

tags = merge(local.tags, { Name = "eks-nat-gw-2" })

}

RT and RTA

Route Tables and Route Tables Association section:

- aws_route_table terraform Resource.

- aws_route_table_association terraform Resource.

We will have three route tables and then associate each one of the four subnets with the appropriate route table.

- Public Route Table

- Private Route Table One

- Private Route Table Two

resource "aws_route_table" "public" {

vpc_id = aws_vpc.main.id

route {

cidr_block = "0.0.0.0/0"

gateway_id = aws_internet_gateway.main.id

}

tags = merge(local.tags, { Name = "eks-public-rt" })

}

resource "aws_route_table" "private_1" {

vpc_id = aws_vpc.main.id

route {

cidr_block = "0.0.0.0/0"

nat_gateway_id = aws_nat_gateway.gw_1.id

}

tags = merge(local.tags, { Name = "eks-private-rt-1" })

}

resource "aws_route_table" "private_2" {

vpc_id = aws_vpc.main.id

route {

cidr_block = "0.0.0.0/0"

nat_gateway_id = aws_nat_gateway.gw_2.id

}

tags = merge(local.tags, { Name = "eks-private-rt-2" })

}

And their respective associations:

resource "aws_route_table_association" "public_1" {

subnet_id = aws_subnet.public_1.id

route_table_id = aws_route_table.public.id

}

resource "aws_route_table_association" "public_2" {

subnet_id = aws_subnet.public_2.id

route_table_id = aws_route_table.public.id

}

resource "aws_route_table_association" "private_1" {

subnet_id = aws_subnet.private_1.id

route_table_id = aws_route_table.private_1.id

}

resource "aws_route_table_association" "private_2" {

subnet_id = aws_subnet.private_2.id

route_table_id = aws_route_table.private_2.id

}

IAM roles for EKS

- aws_iam_role terraform Resource.

- aws_iam_role_policy_attachment terraform Resource.

Note that we will attach the AmazonEKSClusterPolicy policy, which is managed by AWS, to the role. The assume_role_policy defines who can assume this role.

This role is used by the EKS control plane to make calls to AWS API operations on your behalf.

resource "aws_iam_role" "eks_cluster" {

name = "eks-cluster"

assume_role_policy = <<POLICY

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Principal": {

"Service": "eks.amazonaws.com"

},

"Action": "sts:AssumeRole"

}

]

}

POLICY

}

resource "aws_iam_role_policy_attachment" "amazon_eks_cluster_policy" {

policy_arn = "arn:aws:iam::aws:policy/AmazonEKSClusterPolicy"

role = aws_iam_role.eks_cluster.name

}

EKS Cluster

- aws_eks_cluster terraform Resource.

resource "aws_eks_cluster" "eks" {

name = var.cluster_name

# Amazon Resource Name (ARN) of the IAM role that provides permission for

# the kubernetes control plane to make calls to aws API operations on your

# behalf.

role_arn = aws_iam_role.eks_cluster.arn

# Desired Kubernetes master version

version = "1.28"

vpc_config {

endpoint_private_access = false

endpoint_public_access = true

# Must be in at least two subnets in two different

# availability zones.

subnet_ids = [

aws_subnet.public_1.id,

aws_subnet.public_2.id,

aws_subnet.private_1.id,

aws_subnet.private_2.id

]

}

depends_on = [

aws_iam_role_policy_attachment.amazon_eks_cluster_policy

]

tags = local.tags

}

IAM roles for NodeGroups

We will create a role named eks-node-group-general and then attach three AWS-managed policies to that role.

We also control who can assume the eks-node-group-general role through the assume_role_policy below: the EKS worker nodes, which are EC2 instances, will assume this role.

In case you were wondering why we need these policies, please follow the docs here and the above links to know exactly what each policy gives permission to.
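The two assume_role_policy documents in this section, the cluster role above and the node group role below, share the same shape and differ only in the service principal. As a rough illustration, the Python sketch below renders that trust policy for a given service. The function name trust_policy is hypothetical, and the Terraform code in this article uses inline heredocs instead.

```python
import json


def trust_policy(service: str) -> str:
    """Render an IAM trust policy that lets the given AWS service assume the role."""
    document = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": service},
                "Action": "sts:AssumeRole",
            }
        ],
    }
    return json.dumps(document, indent=2)


if __name__ == "__main__":
    # Control plane role: assumed by the EKS service.
    print(trust_policy("eks.amazonaws.com"))
    # Node group role: assumed by the EC2 instances acting as worker nodes.
    print(trust_policy("ec2.amazonaws.com"))
```

Seeing both documents side by side makes it clear that the permissions come from the attached policies, while the trust policy only decides who may use the role.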

resource "aws_iam_role" "node_group_general" {

name = "eks-node-group-general"

assume_role_policy = <<POLICY

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Principal": {

"Service": "ec2.amazonaws.com"

},

"Action": "sts:AssumeRole"

}

]

}

POLICY

}

- AmazonEKSWorkerNodePolicy

- AmazonEKS_CNI_Policy

- AmazonEC2ContainerRegistryReadOnly

resource "aws_iam_role_policy_attachment" "amazon_eks_worker_node_policy_general" {

policy_arn = "arn:aws:iam::aws:policy/AmazonEKSWorkerNodePolicy"

role = aws_iam_role.node_group_general.name

}

resource "aws_iam_role_policy_attachment" "amazon_eks_cni_policy_general" {

policy_arn = "arn:aws:iam::aws:policy/AmazonEKS_CNI_Policy"

role = aws_iam_role.node_group_general.name

}

resource "aws_iam_role_policy_attachment" "amazon_ec2_container_registry_read_only_general" {

policy_arn = "arn:aws:iam::aws:policy/AmazonEC2ContainerRegistryReadOnly"

role = aws_iam_role.node_group_general.name

}

EKS NodeGroup

- aws_eks_node_group terraform Resource.

resource "aws_eks_node_group" "nodes_general" {

cluster_name = aws_eks_cluster.eks.name

node_group_name = "nodes-general-group"

node_role_arn = aws_iam_role.node_group_general.arn

# Identifiers of EC2 subnets to associate with the EKS Node Group.

# These subnets must have the following resource tags:

# - kubernetes.io/cluster/CLUSTER_NAME

# Where CLUSTER_NAME is replaced with the name of the EKS cluster.

subnet_ids = [

aws_subnet.private_1.id,

aws_subnet.private_2.id

]

scaling_config {

desired_size = var.node_group_scaling_config.desired_size

max_size = var.node_group_scaling_config.max_size

min_size = var.node_group_scaling_config.min_size

}

# Valid Values: AL2_x86_64, BOTTLEROCKET_x86_64

# Ref: https://docs.aws.amazon.com/eks/latest/APIReference/API_Nodegroup.html#API_Nodegroup_Contents

ami_type = "BOTTLEROCKET_x86_64"

# Valid Values: ON_DEMAND, SPOT

capacity_type = "ON_DEMAND"

disk_size = 20 # GiB

# Force version update if existing Pods are unable to be drained

# due to a pod disruption budget issue.

force_update_version = false

# Docs: https://aws.amazon.com/ec2/instance-types/

instance_types = ["t3.medium"]

labels = {

role = "nodes-general"

}

# If not specified, then inherited from the EKS master plane.

version = "1.28"

depends_on = [

aws_iam_role_policy_attachment.amazon_eks_worker_node_policy_general,

aws_iam_role_policy_attachment.amazon_eks_cni_policy_general,

aws_iam_role_policy_attachment.amazon_ec2_container_registry_read_only_general

]

tags = local.tags

}

scaling_config {

desired_size = 2

max_size = 2

min_size = 2

}

We cannot have fewer than 2 worker nodes in the EKS cluster, as we will add a podAntiAffinity rule to the ingress-nginx controller. More on this later.

Test & Verify

terraform fmt

terraform init

terraform validate

terraform plan

terraform apply

rm ~/.kube/config # (Optional)

aws eks --region eu-central-1 update-kubeconfig --name eks-cluster-production --profile terraform

kubectl get nodes,svc

10_Platform

In this section we will provision:

- Kube Prometheus Stack and Loki.

- Two Ingress Nginx Controllers.

- Route53 with split horizon dns.

- Cert-Manager.

- Sealed Secrets.

Provisioning the 10_platform layer took me:

- ~4 minutes to apply.

- ~2 minutes to destroy.

Vars

variable "region" {

description = "The AWS region to deploy the resources."

type = string

default = "eu-central-1"

}

variable "profile" {

description = "The AWS profile to use."

type = string

default = "terraform"

}

variable "cluster_name" {

description = "The name of the EKS cluster."

type = string

default = "eks-cluster-production"

}

Providers

terraform {

required_providers {

aws = {

source = "hashicorp/aws"

version = "5.45.0"

}

kubernetes = {

source = "hashicorp/kubernetes"

version = "2.29.0"

}

kubectl = {

source = "gavinbunney/kubectl"

version = "1.14.0"

}

helm = {

source = "hashicorp/helm"

version = "2.13.0"

}

tls = {

source = "hashicorp/tls"

version = "4.0.5"

}

}

}

provider "aws" {

region = var.region

profile = var.profile

}

provider "kubernetes" {

host = data.aws_eks_cluster.cluster.endpoint

token = data.aws_eks_cluster_auth.cluster.token

cluster_ca_certificate = base64decode(data.aws_eks_cluster.cluster.certificate_authority.0.data)

}

provider "helm" {

kubernetes {

host = data.aws_eks_cluster.cluster.endpoint

token = data.aws_eks_cluster_auth.cluster.token

cluster_ca_certificate = base64decode(data.aws_eks_cluster.cluster.certificate_authority.0.data)

}

}

provider "kubectl" {

host = data.aws_eks_cluster.cluster.endpoint

token = data.aws_eks_cluster_auth.cluster.token

cluster_ca_certificate = base64decode(data.aws_eks_cluster.cluster.certificate_authority.0.data)

load_config_file = false

}
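A note on the token-based authentication used above: the token returned by aws_eks_cluster_auth is short-lived, so a long apply can outlive it. The Kubernetes provider also supports an exec block that fetches a fresh token on demand. The snippet below is a sketch of that alternative for the kubernetes provider only, assuming the AWS CLI is installed on the machine running Terraform; it is not what the rest of this article uses.

```hcl
# Alternative sketch: let the provider call the AWS CLI for a token
# instead of reading it from data.aws_eks_cluster_auth.
provider "kubernetes" {
  host                   = data.aws_eks_cluster.cluster.endpoint
  cluster_ca_certificate = base64decode(data.aws_eks_cluster.cluster.certificate_authority[0].data)

  exec {
    api_version = "client.authentication.k8s.io/v1beta1"
    command     = "aws"
    args = [
      "eks", "get-token",
      "--cluster-name", var.cluster_name,
      "--region", var.region,
      "--profile", var.profile,
    ]
  }
}
```

The trade-off is an extra runtime dependency on the aws binary in exchange for not depending on a token that was issued at the start of the run.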

Helm Intro

We will install these charts with Terraform, but first I want to show you how you would install them with the helm CLI directly.

helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

helm repo update

helm search repo kube-prometheus-stack --max-col-width 23

# Release name: monitoring

# Helm chart name: kube-prometheus-stack

helm install monitoring prometheus-community/kube-prometheus-stack \

--values prometheus-values.yaml \

--version 58.1.3 \

--namespace monitoring \

--create-namespace

# Later when you are done

helm uninstall monitoring -n monitoring

helm repo add ingress-nginx https://kubernetes.github.io/ingress-nginx

helm repo update

helm search repo ingress-nginx --max-col-width 23

helm install ingress-nginx ingress-nginx/ingress-nginx \

--values ingress-values.yaml \

--version 4.10.0 \

--namespace ingress-nginx \

--create-namespace

# Later when you are done

helm uninstall ingress-nginx -n ingress-nginx

helm repo add jetstack https://charts.jetstack.io

helm repo update

helm search repo cert-manager --max-col-width 23

helm install cert-manager jetstack/cert-manager \

--values cert-manager-values.yaml \

--version 1.14.4 \

--namespace cert-manager \

--create-namespace

# Later when you are done

helm uninstall cert-manager -n cert-manager

Draft Plan

The following is just us drafting the plan. The steps are described as you would do them in the UI, but we will use Terraform instead. Do not worry if a certain part is unclear; we are only planning here and will go into details later:

- Delegate a subdomain to Route53: *.k8s.sreboy.com.
  - Create a public hosted zone in Route53.
    - Domain Name: k8s.sreboy.com.
  - Create a nameserver (NS) record at your domain registrar, e.g. Namecheap.
  - (Optional) Test subdomain delegation with a dummy record test.k8s.sreboy.com in Route53 and try to resolve it with dig +short test.k8s.sreboy.com. The value can be anything, e.g. 10.10.10.10. You can also use whatsmydns to watch DNS propagation.
- We will use IRSA (IAM Roles for Service Accounts) to allow cert-manager to manage the Route53 hosted zone.
  - Create the OpenID Connect Provider first:
    - Open the EKS service in the AWS Console. Then under clusters select the cluster.
    - Under the Configuration tab, copy the OpenID Connect Provider URL.
    - Navigate to the IAM service, then Identity Providers. Select Add provider.
    - Select OpenID Connect, paste the URL and click Get thumbprint.
    - Under Audience: sts.amazonaws.com.
    - Click Add provider.
  - Create an IAM policy. Name the policy CertManagerRoute53Access:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": "route53:GetChange",
          "Resource": "arn:aws:route53:::change/*"
        },
        {
          "Effect": "Allow",
          "Action": [
            "route53:ChangeResourceRecordSets",
            "route53:ListResourceRecordSets"
          ],
          "Resource": "arn:aws:route53:::hostedzone/<id>"
        }
      ]
    }

  - Create an IAM role and associate it with the kubernetes service account. Under Roles click Create role.
    - Select the type of trusted entity to be Web identity.
    - Choose the identity provider created in step 1.
    - For Audience: sts.amazonaws.com.
    - Click next for permissions and attach the CertManagerRoute53Access policy.
    - Name the role cert-manager-acme.
  - To allow only our cert-manager kubernetes service account to assume this role, we need to update the Trust Relationship of the cert-manager-acme role. Click edit Trust Relationships:
    - First we need the name of the service account attached to cert-manager.
    - Run kubectl -n cert-manager get sa. In this setup it is called cert-manager; with a different Helm release name it would differ, e.g. cert-083-cert-manager.
    - Update the trust relationship to be:

      Before:

      {
        "Version": "2012-10-17",
        "Statement": [
          {
            "Effect": "Allow",
            "Principal": {
              "Federated": "<OIDC_PROVIDER_ARN>"
            },
            "Action": "sts:AssumeRoleWithWebIdentity",
            "Condition": {
              "StringEquals": {
                "oidc.eks.eu-central-1.amazonaws.com/id/<CLUSTER_ID>:aud": "sts.amazonaws.com"
              }
            }
          }
        ]
      }

      After:

      {
        "Version": "2012-10-17",
        "Statement": [
          {
            "Effect": "Allow",
            "Principal": {
              "Federated": "<OIDC_PROVIDER_ARN>"
            },
            "Action": "sts:AssumeRoleWithWebIdentity",
            "Condition": {
              "StringEquals": {
                "oidc.eks.eu-central-1.amazonaws.com/id/<CLUSTER_ID>:sub": "system:serviceaccount:cert-manager:cert-manager"
              }
            }
          }
        ]
      }

  - Attach the policy CertManagerRoute53Access to the role cert-manager-acme. Remember that the assume_role_policy created inside the role defines who can assume this role.
- Install Kube Prometheus Stack with a custom values.yaml file.
- Install Ingress-Nginx with a custom values.yaml file.
- Install Cert-Manager with a custom values.yaml file.
- Install Sealed-Secrets with a custom values.yaml file.

Visualize Plan

The following is a Simplified Dependency Graph made by Mermaid.

Data

This file collects the data sources we need, including values from the previous layer. For example, the aws_caller_identity data source gives access to the effective Account ID, User ID, and ARN under which Terraform is authorized.

data "aws_eks_cluster" "cluster" {

name = var.cluster_name

}

data "aws_eks_cluster_auth" "cluster" {

name = var.cluster_name

}

# Data Source: aws_caller_identity

# https://registry.terraform.io/providers/hashicorp/aws/latest/docs/data-sources/caller_identity

data "aws_caller_identity" "current" {}

data "kubernetes_service" "external_nginx_controller" {

metadata {

name = "ingress-nginx-external-controller"

namespace = "ingress-nginx-external"

}

depends_on = [

helm_release.ingress-nginx-external

]

}

data "kubernetes_service" "internal_nginx_controller" {

metadata {

name = "ingress-nginx-internal-controller"

namespace = "ingress-nginx-internal"

}

depends_on = [

helm_release.ingress-nginx-internal

]

}

data "tls_certificate" "demo" {

url = data.aws_eks_cluster.cluster.identity.0.oidc.0.issuer

}


Outputs

output "internal_nginx_dns_lb" {

description = "Internal DNS name for the NGINX Load Balancer."

value = data.kubernetes_service.internal_nginx_controller.status.0.load_balancer.0.ingress.0.hostname

}

output "ns_records" {

description = "The name servers for the public hosted zone"

value = aws_route53_zone.public.name_servers

}

output "external_nginx_dns_lb" {

description = "External DNS name for the NGINX Load Balancer."

value = data.kubernetes_service.external_nginx_controller.status.0.load_balancer.0.ingress.0.hostname

}

output "issuer_url_oidc" {

description = "Issuer URL for the OpenID Connect identity provider."

value = data.aws_eks_cluster.cluster.identity.0.oidc.0.issuer

}

output "issuer_url_oidc_replaced" {

description = "Issuer URL for the OpenID Connect identity provider without https://."

value = replace(data.aws_eks_cluster.cluster.identity.0.oidc.0.issuer, "https://", "")

}

Kube Prometheus Stack

- helm_release terraform Resource.

resource "helm_release" "kube_prometheus_stack" {

name = "monitoring"

namespace = "monitoring"

repository = "https://prometheus-community.github.io/helm-charts"

chart = "kube-prometheus-stack"

version = "58.1.3"

timeout = 300

atomic = true

create_namespace = true

values = [

"${file("files/kube-prometheus-stack-values.yaml")}"

]

}

resource "helm_release" "loki-distributed" {

name = "loki"

namespace = "monitoring"

repository = "https://grafana.github.io/helm-charts"

chart = "loki-distributed"

version = "0.79.0"

timeout = 300

atomic = true

create_namespace = true

values = [

"${file("files/loki-distributed-values.yaml")}"

]

depends_on = [helm_release.kube_prometheus_stack]

}

resource "helm_release" "promtail" {

name = "promtail"

namespace = "monitoring"

repository = "https://grafana.github.io/helm-charts"

chart = "promtail"

version = "6.15.5"

timeout = 300

atomic = true

create_namespace = true

values = [

"${file("files/promtail-values.yaml")}"

]

}

Custom values.yaml

I provided inline comments explaining each value customized in the kube-prometheus-stack-values.yaml file.

files/kube-prometheus-stack-values.yaml:

---
# Ref: https://github.com/prometheus-community/helm-charts/blob/main/charts/kube-prometheus-stack/values.yaml

# Since we are using EKS, the control plane is abstracted away from us.
# We do NOT need to manage etcd, the scheduler, the controller-manager, or the API server.
# The following disables the alert rules for etcd and kube-scheduler.
defaultRules:
  rules:
    etcd: false
    kubeScheduler: false

# Then disable the ServiceMonitors for them.
kubeControllerManager:
  enabled: false
kubeScheduler:
  enabled: false
kubeEtcd:
  enabled: false

# Add custom labels to discover ServiceMonitors.
prometheus:
  prometheusSpec:
    ## If true, a nil or {} value for prometheus.prometheusSpec.serviceMonitorSelector will cause the
    ## prometheus resource to be created with selectors based on values in the helm deployment,
    ## which will also match the servicemonitors created
    ##
    serviceMonitorSelectorNilUsesHelmValues: false
    serviceMonitorSelector: {}
      # matchLabels:
      #   Prometheus will watch ServiceMonitor objects
      #   with the following label:
      #   e.g. app.kubernetes.io/monitored-by: prometheus
      #   prometheus: monitor
    serviceMonitorNamespaceSelector: {}
      # matchLabels:
      #   By default, Prometheus will ONLY detect ServiceMonitors
      #   in its own namespace `monitoring`. Instruct Prometheus
      #   to select ServiceMonitors in all namespaces with the
      #   following label:
      #   e.g. app.kubernetes.io/part-of: prometheus
      #   monitoring: prometheus

# Last thing: update the common labels.
# If you do NOT add them, the Prometheus Operator
# will IGNORE the default ServiceMonitors created
# by this helm chart. Consequently, the Prometheus
# targets section will be empty.
# commonLabels:
#   prometheus: monitor
#   monitoring: prometheus

# Optionally, you can update the grafana admin password
grafana:
  adminPassword: testing321
  additionalDataSources:
    - name: Loki
      type: loki
      url: http://loki-loki-distributed-query-frontend.monitoring:3100

files/loki-distributed-values.yaml:

---

# Ref: https://github.com/grafana/helm-charts/blob/main/charts/loki-distributed/values.yaml

loki:

serviceMonitor:

enabled: true

files/promtail-values.yaml:

---

# Ref: https://github.com/grafana/helm-charts/blob/main/charts/promtail/values.yaml

config:

clients:

- url: "http://loki-loki-distributed-gateway/loki/api/v1/push"

Ingress Nginx

- External Ingress

- Internal Ingress

resource "helm_release" "ingress-nginx-external" {

name = "ingress-nginx-external"

namespace = "ingress-nginx-external"

repository = "https://kubernetes.github.io/ingress-nginx"

chart = "ingress-nginx"

version = "4.0.1"

timeout = 300

atomic = true

create_namespace = true

depends_on = [

helm_release.kube_prometheus_stack

]

values = [

"${file("files/external-nginx-values.yaml")}"

]

}

resource "helm_release" "ingress-nginx-internal" {

name = "ingress-nginx-internal"

namespace = "ingress-nginx-internal"

repository = "https://kubernetes.github.io/ingress-nginx"

chart = "ingress-nginx"

version = "4.0.1"

timeout = 300

atomic = true

create_namespace = true

depends_on = [

helm_release.kube_prometheus_stack

]

values = [

"${file("files/internal-nginx-values.yaml")}"

]

}

files/external-nginx-values.yaml:

---

# Ref: https://github.com/kubernetes/ingress-nginx/blob/main/charts/ingress-nginx/values.yaml

controller:

# name: controller

# -- Election ID to use for status update, by default it uses the controller name combined with a suffix of 'leader'

# electionID: ""

config:

# https://github.com/kubernetes/ingress-nginx/blob/main/docs/user-guide/nginx-configuration/configmap.md#compute-full-forwarded-for

compute-full-forwarded-for: "true"

# https://github.com/kubernetes/ingress-nginx/blob/main/docs/user-guide/nginx-configuration/configmap.md#use-forwarded-headers

use-forwarded-headers: "true"

# https://github.com/kubernetes/ingress-nginx/blob/main/docs/user-guide/nginx-configuration/configmap.md#proxy-body-size

proxy-body-size: "0"

# The name by which we reference this particular ingress controller.

# In case you have multiple ingress controllers, you can use

# `ingressClassName` to specify which ingress controller to use.

# ALSO: For backwards compatibility with the ingress.class annotation, use ingressClass. The algorithm is as follows: first ingressClassName is considered; if it is not present, the controller looks for the ingress.class annotation.

# Ref: https://github.com/kubernetes/ingress-nginx/tree/main/charts/ingress-nginx

# E.g. very often we have `internal` and `external` ingresses in the same cluster.

ingressClass: external-nginx

# New kubernetes APIs starting from 1.18 let us create an ingress class resource

ingressClassResource:

name: external-nginx

# ENABLED: Create the IngressClass or not

enabled: true

# DEFAULT: If true, Ingresses without ingressClassName get assigned to this IngressClass on creation. Ingress creation gets rejected if there are multiple default IngressClasses. Ref: https://kubernetes.io/docs/concepts/services-networking/ingress/#default-ingress-class

default: false

# Ref: https://kubernetes.github.io/ingress-nginx/user-guide/multiple-ingress/#using-ingressclasses

controllerValue: "k8s.io/ingress-nginx-external"

# Pod Anti-Affinity Rule: schedules nginx ingress pods on different nodes.

# Very helpful if you do NOT want to disrupt services during Kubernetes rolling

# upgrades.

# IMPORTANT: always try to use it.

affinity:

podAntiAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

- labelSelector:

matchExpressions:

- key: app.kubernetes.io/name

operator: In

values:

- ingress-nginx

topologyKey: "kubernetes.io/hostname"

# Should be at least 2, or configure auto-scaling

replicaCount: 1

# Admission webhooks: verifies the configuration before applying the ingress.

# E.g. syntax error in the configuration snippet annotation, the generated

# configuration becomes invalid

admissionWebhooks:

enabled: true

# Ingress is always deployed with some kind of a load balancer. You may use

# annotations supported by your cloud provider to configure it. E.g. in AWS

# you can use `aws-load-balancer-type` as the default is `classic`.

service:

annotations:

# Ref: https://kubernetes-sigs.github.io/aws-load-balancer-controller/v2.2/guide/service/annotations/

service.beta.kubernetes.io/aws-load-balancer-name: "load-balancer-external"

service.beta.kubernetes.io/aws-load-balancer-type: nlb

# service.beta.kubernetes.io/aws-load-balancer-cross-zone-load-balancing-enabled: "true"

# We want to enable prometheus metrics on the controller

metrics:

enabled: true

serviceMonitor:

enabled: true

# additionalLabels:

# prometheus: monitor

files/internal-nginx-values.yaml:

---

# Ref: https://github.com/kubernetes/ingress-nginx/blob/main/charts/ingress-nginx/values.yaml

controller:

# name: controller

# -- Election ID to use for status update, by default it uses the controller name combined with a suffix of 'leader'

# electionID: ""

config:

# https://github.com/kubernetes/ingress-nginx/blob/main/docs/user-guide/nginx-configuration/configmap.md#compute-full-forwarded-for

compute-full-forwarded-for: "true"

# https://github.com/kubernetes/ingress-nginx/blob/main/docs/user-guide/nginx-configuration/configmap.md#use-forwarded-headers

use-forwarded-headers: "true"

# https://github.com/kubernetes/ingress-nginx/blob/main/docs/user-guide/nginx-configuration/configmap.md#proxy-body-size

proxy-body-size: "0"

# The name by which we reference this particular ingress controller.

# In case you have multiple ingress controllers, you can use

# `ingressClassName` to specify which ingress controller to use.

# ALSO: For backwards compatibility with the ingress.class annotation, use ingressClass. The algorithm is as follows: first ingressClassName is considered; if it is not present, the controller looks for the ingress.class annotation.

# Ref: https://github.com/kubernetes/ingress-nginx/tree/main/charts/ingress-nginx

# E.g. very often we have `internal` and `external` ingresses in the same cluster.

ingressClass: internal-nginx

# New kubernetes APIs starting from 1.18 let us create an ingress class resource

ingressClassResource:

name: internal-nginx

# ENABLED: Create the IngressClass or not

enabled: true

# DEFAULT: If true, Ingresses without ingressClassName get assigned to this IngressClass on creation. Ingress creation gets rejected if there are multiple default IngressClasses. Ref: https://kubernetes.io/docs/concepts/services-networking/ingress/#default-ingress-class

default: true

# Ref: https://kubernetes.github.io/ingress-nginx/user-guide/multiple-ingress/#using-ingressclasses

controllerValue: "k8s.io/ingress-nginx-internal"

# Pod Anti-Affinity Rule: schedules nginx ingress pods on different nodes.

# Very helpful if you do NOT want to disrupt services during Kubernetes rolling

# upgrades.

# IMPORTANT: always try to use it.

affinity:

podAntiAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

- labelSelector:

matchExpressions:

- key: app.kubernetes.io/name

operator: In

values:

- ingress-nginx

topologyKey: "kubernetes.io/hostname"

# Should be at least 2, or configure auto-scaling

replicaCount: 1

# Admission webhooks: verifies the configuration before applying the ingress.

# E.g. syntax error in the configuration snippet annotation, the generated

# configuration becomes invalid

admissionWebhooks:

enabled: true

# Ingress is always deployed with some kind of a load balancer. You may use

# annotations supported by your cloud provider to configure it. E.g. in AWS

# you can use `aws-load-balancer-type` as the default is `classic`.

service:

external:

enabled: false

internal:

enabled: true

annotations:

# Ref: https://kubernetes-sigs.github.io/aws-load-balancer-controller/v2.2/guide/service/annotations/

# if you want to have an internal load balancer with only private

# IP address. That you can use within your VPC. you can use:

# Ref: https://docs.aws.amazon.com/elasticloadbalancing/latest/userguide/how-elastic-load-balancing-works.html

service.beta.kubernetes.io/aws-load-balancer-type: nlb

service.beta.kubernetes.io/aws-load-balancer-name: "load-balancer-internal"

service.beta.kubernetes.io/aws-load-balancer-scheme: "internal"

# service.beta.kubernetes.io/aws-load-balancer-cross-zone-load-balancing-enabled: "true"

# We want to enable prometheus metrics on the controller

metrics:

enabled: true

serviceMonitor:

enabled: true

# additionalLabels:

# prometheus: monitor
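To make the role of ingressClassName concrete, here is a sketch of an Ingress that is served only by the internal controller. It is written with the kubernetes provider's kubernetes_ingress_v1 resource to stay in Terraform. The resource name grafana_internal and the choice of exposing Grafana this way are purely illustrative assumptions; later in the article the Ingress objects are plain YAML files.

```hcl
# Hypothetical example: route grafana.k8s.sreboy.com through the
# internal controller by selecting its IngressClass.
resource "kubernetes_ingress_v1" "grafana_internal" {
  metadata {
    name      = "grafana-internal"
    namespace = "monitoring"
  }

  spec {
    # Must match ingressClassResource.name in internal-nginx-values.yaml.
    ingress_class_name = "internal-nginx"

    rule {
      host = "grafana.k8s.sreboy.com"

      http {
        path {
          path      = "/"
          path_type = "Prefix"

          backend {
            service {
              name = "monitoring-grafana"
              port {
                number = 80
              }
            }
          }
        }
      }
    }
  }
}
```

Switching ingress_class_name to external-nginx would hand the same rule to the external controller instead.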

Route53

It is time to create the public and private hosted zones in Route53. As I said before, we will implement split-horizon DNS. I registered my domain name with Namecheap, and we will delegate the subdomain k8s.sreboy.com to Route53.

Why do we need to delegate the subdomain to Route53? Because we want cert-manager to manage records in the hosted zone for the dns-01 challenge. Access is granted using IAM Roles for Service Accounts (IRSA), which is much easier to do with Route53 than with Namecheap.

Basically, the steps are:

- Create a Public Hosted Zone in Route53.
- Create an NS record in Namecheap to delegate the subdomain to Route53.
- (Optionally) Test the delegation with a dummy record.

Resources Used:

- aws_route53_zone terraform Resource.

- Public Hosted Zone

- Private Hosted Zone

resource "aws_route53_zone" "public" {

name = "k8s.sreboy.com"

}

resource "aws_route53_record" "wildcard_cname" {

zone_id = aws_route53_zone.public.zone_id

name = "*"

type = "CNAME"

ttl = "300"

records = [

data.kubernetes_service.external_nginx_controller.status.0.load_balancer.0.ingress.0.hostname

]

}

resource "aws_route53_zone" "private" {

name = "k8s.sreboy.com"

vpc {

vpc_id = data.aws_eks_cluster.cluster.vpc_config.0.vpc_id

}

}

resource "aws_route53_record" "internal_wildcard_cname" {

zone_id = aws_route53_zone.private.zone_id

name = "*"

type = "CNAME"

ttl = "300"

records = [

data.kubernetes_service.internal_nginx_controller.status.0.load_balancer.0.ingress.0.hostname

]

}
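The optional delegation test can also be expressed in Terraform. This is a minimal sketch: the resource name delegation_test, the TTL, and the dummy value 10.10.10.10 are assumptions for illustration. Note that an explicit record for test takes precedence over the wildcard CNAME for that one name.

```hcl
# Hypothetical dummy record to check that the NS delegation works.
resource "aws_route53_record" "delegation_test" {
  zone_id = aws_route53_zone.public.zone_id
  name    = "test.${aws_route53_zone.public.name}"
  type    = "A"
  ttl     = 60
  records = ["10.10.10.10"]
}
```

Once the NS records are in place at the registrar, dig +short test.k8s.sreboy.com should return the dummy value. Remove the resource afterwards so the name falls back to the wildcard.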

Namecheap has a very frustrating API. See docs. They require you to whitelist the IP address of the machine you call their API from. You can NOT add a CIDR range, only individual static IP addresses, and you can whitelist at most 10 of them. There are other questionable API design decisions too, e.g. while adding or updating a record you can DELETE all your previous records if you forget to set the mode from OVERWRITE to MERGE, and if you call the API raw you have to include all your previous records in the call. It is a joke (a bad one).

So, instead of using terraform:

- namecheap_domain_records terraform resource.

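resource "namecheap_domain_records" "delegate_to_route53" {
  domain = "sreboy.com"
  # MERGE keeps the existing records; OVERWRITE would replace them all.
  mode = "MERGE"

  # One NS record per name server of the public hosted zone.
  dynamic "record" {
    for_each = aws_route53_zone.public.name_servers
    content {
      hostname = "k8s"
      type     = "NS"
      address  = record.value
    }
  }
}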

I will do it from the Namecheap UI once the public hosted zone is created in Route53.

If, like me, your ISP does not provide a static IP address, you can use a shadowsocks server and whitelist its Elastic IP. I learned that after I finished writing the article :)

Cert-Manager

Now it is time to install cert-manager. We will use it to automate obtaining and renewing TLS certificates for our services.

This corresponds to the block called Cert-Manager Configuration in the graph above, but I will split it into separate blocks for better understanding.

resource "aws_iam_openid_connect_provider" "eks_oidc" {

url = data.aws_eks_cluster.cluster.identity.0.oidc.0.issuer

client_id_list = ["sts.amazonaws.com"]

thumbprint_list = [data.tls_certificate.demo.certificates[0].sha1_fingerprint]

}

resource "aws_iam_policy" "cert_manager_route53_access" {

name = "CertManagerRoute53Access"

description = "Policy for cert-manager to manage Route53 hosted zone"

depends_on = [

aws_route53_zone.public,

aws_route53_zone.private,

]

policy = <<EOF

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": "route53:GetChange",

"Resource": "arn:aws:route53:::change/*"

},

{

"Effect": "Allow",

"Action": [

"route53:ChangeResourceRecordSets",

"route53:ListResourceRecordSets"

],

"Resource": [

"arn:aws:route53:::hostedzone/${aws_route53_zone.public.zone_id}",

"arn:aws:route53:::hostedzone/${aws_route53_zone.private.zone_id}"

]

}

]

}

EOF

# [1]: The first statement allows cert-manager to get the current state

# of the request, to find out if DNS record changes have been

# propagated to all Route53 DNS servers.

# [2]: The second statement allows updating DNS records such as the TXT

# record for the ACME challenge, limited to our two hosted zones.

}

resource "aws_iam_role" "cert_manager_acme" {

name = "cert-manager-acme"

assume_role_policy = <<EOF

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Principal": {

"Federated": "arn:aws:iam::${data.aws_caller_identity.current.account_id}:oidc-provider/${replace(data.aws_eks_cluster.cluster.identity.0.oidc.0.issuer, "https://", "")}"

},

"Action": "sts:AssumeRoleWithWebIdentity",

"Condition": {

"StringEquals": {

"${replace(data.aws_eks_cluster.cluster.identity.0.oidc.0.issuer, "https://", "")}:sub": "system:serviceaccount:cert-manager:cert-manager"

}

}

}

]

}

EOF

}

resource "aws_iam_role_policy_attachment" "cert_manager_acme" {

role = aws_iam_role.cert_manager_acme.name

policy_arn = aws_iam_policy.cert_manager_route53_access.arn

}
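As an alternative to the heredoc JSON, the same trust policy can be built with the aws_iam_policy_document data source, which catches syntax mistakes at plan time. This is a sketch and not what the rest of the article uses: the names oidc_issuer and cert_manager_assume_role are mine, and the extra aud condition is an optional addition that the heredoc version above does not have.

```hcl
locals {
  # OIDC issuer without the scheme, as it appears in IAM condition keys.
  oidc_issuer = replace(data.aws_eks_cluster.cluster.identity[0].oidc[0].issuer, "https://", "")
}

data "aws_iam_policy_document" "cert_manager_assume_role" {
  statement {
    effect  = "Allow"
    actions = ["sts:AssumeRoleWithWebIdentity"]

    principals {
      type        = "Federated"
      identifiers = [aws_iam_openid_connect_provider.eks_oidc.arn]
    }

    # Only the cert-manager service account may assume the role.
    condition {
      test     = "StringEquals"
      variable = "${local.oidc_issuer}:sub"
      values   = ["system:serviceaccount:cert-manager:cert-manager"]
    }

    # Optional: also pin the token audience to STS.
    condition {
      test     = "StringEquals"
      variable = "${local.oidc_issuer}:aud"
      values   = ["sts.amazonaws.com"]
    }
  }
}

# Usage inside aws_iam_role.cert_manager_acme, replacing the heredoc:
#   assume_role_policy = data.aws_iam_policy_document.cert_manager_assume_role.json
```

Referencing aws_iam_openid_connect_provider.eks_oidc.arn also gives Terraform an implicit dependency between the role and the OIDC provider.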

resource "helm_release" "cert-manager" {

name = "cert-manager"

namespace = "cert-manager"

repository = "https://charts.jetstack.io"

chart = "cert-manager"

version = "1.14.4"

timeout = 300

atomic = true

create_namespace = true

depends_on = [

aws_iam_role_policy_attachment.cert_manager_acme,

]

values = [

<<YAML

installCRDs: true

# Helm chart will create the following CRDs:

# - Issuer

# - ClusterIssuer

# - Certificate

# - CertificateRequest

# - Order

# - Challenge

# Enable prometheus metrics, and create a service

# monitor object

prometheus:

# Ref: https://github.com/cert-manager/cert-manager/blob/master/deploy/charts/cert-manager/README.template.md#prometheusenabled--bool

enabled: true

servicemonitor:

enabled: true

# Incase we had more than one prometheus instance

# prometheusInstance: monitor

# DNS-01 Route53

serviceAccount:

annotations:

eks.amazonaws.com/role-arn: ${aws_iam_role.cert_manager_acme.arn}

extraArgs:

# You need to provide the following to be able to use the IAM role.

# If you are using cluster issuer you need to replace it with:

- --cluster-issuer-ambient-credentials

- --issuer-ambient-credentials

# - --enable-certificate-owner-ref=true

- --dns01-recursive-nameservers-only

- --dns01-recursive-nameservers=8.8.8.8:53,1.1.1.1:53

YAML

]

}

- Public Cluster Issuer

- Private Cluster Issuer

resource "kubectl_manifest" "cert_manager_cluster_issuer_public" {

depends_on = [

helm_release.cert-manager

]

yaml_body = yamlencode({

"apiVersion" = "cert-manager.io/v1"

"kind" = "ClusterIssuer"

"metadata" = {

"name" = "letsencrypt-dns01-production-cluster-issuer-public"

}

"spec" = {

"acme" = {

"server" = "https://acme-v02.api.letsencrypt.org/directory"

"email" = "ziadmansour.4.9.2000@gmail.com"

"privateKeySecretRef" = {

"name" = "letsencrypt-production-dns01-public-key-pair"

}

"solvers" = [

{

"dns01" = {

"route53" = {

"region" = "${var.region}"

"hostedZoneID" = "${aws_route53_zone.public.zone_id}"

}

}

}

]

}

}

})

}

resource "kubectl_manifest" "cert_manager_cluster_issuer_private" {

depends_on = [

helm_release.cert-manager

]

yaml_body = yamlencode({

"apiVersion" = "cert-manager.io/v1"

"kind" = "ClusterIssuer"

"metadata" = {

"name" = "letsencrypt-dns01-production-cluster-issuer-private"

}

"spec" = {

"acme" = {

"server" = "https://acme-v02.api.letsencrypt.org/directory"

"email" = "ziadmansour.4.9.2000@gmail.com"

"privateKeySecretRef" = {

"name" = "letsencrypt-production-dns01-private-key-pair"

}

"solvers" = [

{

"dns01" = {

"route53" = {

"region" = "${var.region}"

"hostedZoneID" = "${aws_route53_zone.private.zone_id}"

}

}

}

]

}

}

})

}
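A quick way to check that the issuer and the IRSA wiring work end to end is to request a throwaway certificate. The sketch below assumes a hypothetical host test.k8s.sreboy.com and the default namespace; the resource and secret names are made up for the example. Keep in mind that it talks to the Let's Encrypt production endpoint, which is rate limited.

```hcl
# Hypothetical test certificate issued through the public ClusterIssuer.
resource "kubectl_manifest" "test_certificate" {
  depends_on = [
    kubectl_manifest.cert_manager_cluster_issuer_public
  ]

  yaml_body = yamlencode({
    apiVersion = "cert-manager.io/v1"
    kind       = "Certificate"
    metadata = {
      name      = "test-k8s-sreboy-com"
      namespace = "default"
    }
    spec = {
      secretName = "test-k8s-sreboy-com-key-pair"
      dnsNames   = ["test.k8s.sreboy.com"]
      issuerRef = {
        name = "letsencrypt-dns01-production-cluster-issuer-public"
        kind = "ClusterIssuer"
      }
    }
  })
}
```

After applying it, kubectl -n default get certificate shows whether the certificate became Ready, and kubectl -n default describe challenge helps when it does not.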

Test & Verify

- Verify that the DNS delegation for the subdomain is working.
  - Use whatsmydns to check the DNS propagation. Enter k8s.sreboy.com and see if the NS records are propagated. You should see the same values as the ns_records output; run terraform output ns_records to see them again.
- Verify that the wildcard CNAME record is created in Route53:
  - Run dig +short test.k8s.sreboy.com and see if it resolves to the external load balancer of the ingress-nginx controller.
  - Or try any other subdomain, since it is a wildcard: dig +short <*>.k8s.sreboy.com.
- Before you move to the NEXT layer, run:

cd terraformed-odyssey/kubernetes/system

# Create Secret

kubectl create secret generic argocd-notifications-secret -n argocd --from-literal slack-token=<slack-token> --dry-run=client -o yaml > secrets/argocd-notifications-secret-ignore.yaml

# Do NOT Forget to add annotations as in here:

# Ref: https://github.com/ZiadMansourM/terraformed-odyssey/blob/main/kubernetes/system/secrets/.gitkeep

# Then Seal the Secret

kubeseal --controller-name sealed-secrets --controller-namespace sealed-secrets --format yaml < secrets/argocd-notifications-secret-ignore.yaml > sealed-argocd-notifications-secret.yaml

15_Platform

In this layer, we will deploy:

- ArgoCD using app-of-apps pattern.

- AWS Client VPN using various authentication methods and over a proxy.

You can use kustomize or define yaml variables inside the yaml files. Do what you feel comfortable with. I will use the yaml files directly for simplicity.

Visualize Plan

This is an overly simplified graph, just to help you visualize the layer and see in which namespace each object lives, e.g. where the dashboards are deployed.

- Cluster Issuer

- Namespaced Issuer

Cert-Manager Issuers

- Cluster Issuer

- Monitoring Issuer

- Goviolin Issuer

- Voting Issuer

apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt-dns01-production-cluster-issuer
spec:
  acme:
    server: https://acme-v02.api.letsencrypt.org/directory
    email: ziadmansour.4.9.2000@gmail.com
    privateKeySecretRef:
      name: letsencrypt-production-dns01-key-pair
    solvers:
      - dns01:
          route53:
            region: eu-central-1
            hostedZoneID: Z10172763D2LB47VXDFP9
---
apiVersion: cert-manager.io/v1
kind: Issuer
metadata:
  name: letsencrypt-dns01-production
  namespace: monitoring
spec:
  acme:
    server: https://acme-v02.api.letsencrypt.org/directory
    email: ziadmansour.4.9.2000@gmail.com
    privateKeySecretRef:
      name: letsencrypt-production-dns01-key-pair
    solvers:
      - dns01:
          route53:
            region: eu-central-1
            hostedZoneID: Z10172763D2LB47VXDFP9
---
apiVersion: cert-manager.io/v1
kind: Issuer
metadata:
  name: letsencrypt-dns01-production
  namespace: goviolin
spec:
  acme:
    server: https://acme-v02.api.letsencrypt.org/directory
    email: ziadmansour.4.9.2000@gmail.com
    privateKeySecretRef:
      name: letsencrypt-production-dns01-key-pair
    solvers:
      - dns01:
          route53:
            region: eu-central-1
            hostedZoneID: Z10172763D2LB47VXDFP9
---
apiVersion: cert-manager.io/v1
kind: Issuer
metadata:
  name: letsencrypt-dns01-production
  namespace: voting
spec:
  acme:
    server: https://acme-v02.api.letsencrypt.org/directory
    email: ziadmansour.4.9.2000@gmail.com
    privateKeySecretRef:
      name: letsencrypt-production-dns01-key-pair
    solvers:
      - dns01:
          route53:
            region: eu-central-1
            hostedZoneID: Z10172763D2LB47VXDFP9

Monitoring Namespace Ingress

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: monitoring-ns-ingress
  namespace: monitoring
  annotations:
    # The referenced name is the ClusterIssuer, so the cluster-issuer
    # annotation is used; cert-manager.io/issuer only resolves a namespaced Issuer.
    cert-manager.io/cluster-issuer: letsencrypt-dns01-production-cluster-issuer
spec:
  ingressClassName: external-nginx
  tls:
    - hosts:
        - grafana.k8s.sreboy.com
      secretName: grafana-goviolin-k8s-sreboy-com-key-pair
    - hosts:
        - prometheus.k8s.sreboy.com
      secretName: prometheus-goviolin-k8s-sreboy-com-key-pair
  rules:
    - host: grafana.k8s.sreboy.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: monitoring-grafana
                port:
                  number: 80
    - host: prometheus.k8s.sreboy.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: monitoring-kube-prometheus-prometheus
                port:
                  number: 9090

Goviolin Namespace

apiVersion: v1
kind: Namespace
metadata:
  name: goviolin
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: goviolin
  namespace: goviolin
spec:
  replicas: 3
  selector:
    matchLabels:
      app: goviolin
  template:
    metadata:
      labels:
        app: goviolin
    spec:
      containers:
        - name: goviolin
          image: ziadmmh/goviolin:v0.0.1
          ports:
            - containerPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: goviolin
  namespace: goviolin
spec:
  selector:
    app: goviolin
  ports:
    - protocol: TCP
      port: 80
      targetPort: 8080
  type: ClusterIP
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: goviolin
  namespace: goviolin
  annotations:
    # The referenced name is the ClusterIssuer, so the cluster-issuer
    # annotation is used; cert-manager.io/issuer only resolves a namespaced Issuer.
    cert-manager.io/cluster-issuer: letsencrypt-dns01-production-cluster-issuer
spec:
  ingressClassName: external-nginx
  tls:
    - hosts:
        - goviolin.k8s.sreboy.com
      secretName: goviolin-k8s-sreboy-com-key-pair
  rules:
    - host: goviolin.k8s.sreboy.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: goviolin
                port:
                  number: 80

---

Custom Dashboards

We are using the Kube Prometheus Stack. It is the de facto solution for deploying Prometheus and Grafana on Kubernetes, and it ships with default targets and dashboards already configured.

It also offers an easy way to add custom dashboards:

- Visit Grafana Dashboards

- Choose the dashboards you like, say:

- For Cert Manager: id 20842, and download the JSON as cert-manager-20842.json.

- For Ingress Nginx: id 14314, and download the JSON as ingress-nginx-14314.json.

- Run the following commands:

kubectl create configmap cert-manager-dashboard-20842 --from-file=$PWD/dashboards/cert-manager-20842.json --dry-run=client -o yaml > cert-manager-dashboard-20842.yaml

kubectl create configmap ingress-nginx-dashboard-14314 --from-file=$PWD/dashboards/ingress-nginx-14314.json --dry-run=client -o yaml > ingress-nginx-dashboard-14314.yaml

We are NOT finished yet:

ziadh@Ziads-MacBook-Air files % tree
.
├── cert-manager-dashboard-20842.yaml
├── dashboards
│   ├── cert-manager-20842.json
│   └── ingress-nginx-14314.json
├── goviolin.yaml
├── ingress-nginx-dashboard-14314.yaml
├── issuers.yaml
└── monitoring.yaml

1 directory, 7 files

Now open cert-manager-dashboard-20842.yaml and ingress-nginx-dashboard-14314.yaml in your editor (e.g. vi) and add the following lines under metadata:

labels:
  grafana_dashboard: "1"

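This label is how Grafana discovers the dashboards and registers them: the dashboard sidecar that ships with the Kube Prometheus Stack watches for ConfigMaps carrying it and loads the JSON they contain.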
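As an alternative to editing the generated files by hand, the same labeled ConfigMaps could be produced by a kustomize `configMapGenerator`. The sketch below is an assumption for illustration, not the setup used here: the `kustomization.yaml` file is hypothetical, and the `monitoring` namespace is a guess at where the Grafana sidecar looks for dashboards in this cluster.

```yaml
# kustomization.yaml (hypothetical file, placed next to the dashboards/ directory)
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization

# Assumption: the dashboards should live where the Grafana sidecar searches.
namespace: monitoring

generatorOptions:
  # Keep the ConfigMap names stable instead of appending a content hash.
  disableNameSuffixHash: true
  # Every generated ConfigMap gets the label the sidecar looks for.
  labels:
    grafana_dashboard: "1"

configMapGenerator:
  - name: cert-manager-dashboard-20842
    files:
      - dashboards/cert-manager-20842.json
  - name: ingress-nginx-dashboard-14314
    files:
      - dashboards/ingress-nginx-14314.json
```

Running `kubectl kustomize .` renders the two ConfigMaps so you can inspect them before applying. Because the dashboards stay as plain JSON files, updating one only requires replacing the file.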

cert-manager-dashboard-20842.yaml

apiVersion: v1

data:

cert-manager-20842.json: |-

{

"annotations": {

"list": [

{

"builtIn": 1,

"datasource": {

"type": "grafana",

"uid": "-- Grafana --"

},

"enable": true,

"hide": true,

"iconColor": "rgba(0, 211, 255, 1)",

"name": "Annotations & Alerts",

"type": "dashboard"

}

]

},

"description": "The dashboard gives an overview of the SSL certs managed by cert-manager in Kubernetes",

"editable": true,

"fiscalYearStartMonth": 0,

"gnetId": 20842,

"graphTooltip": 0,

"links": [],

"panels": [

{

"datasource": {

"type": "prometheus",

"uid": "${datasource}"

},

"description": "The number if available certificates",

"fieldConfig": {

"defaults": {

"color": {

"mode": "thresholds"

},

"mappings": [],

"noValue": "0",

"thresholds": {

"mode": "absolute",

"steps": [

{

"color": "green",

"value": null

}

]

}

},

"overrides": []

},

"gridPos": {

"h": 8,

"w": 8,

"x": 0,

"y": 0

},

"id": 1,

"options": {

"colorMode": "value",

"graphMode": "none",

"justifyMode": "auto",

"orientation": "auto",

"reduceOptions": {

"calcs": [

"lastNotNull"

],

"fields": "",

"values": false

},

"showPercentChange": false,

"textMode": "value",

"wideLayout": true

},

"pluginVersion": "10.4.0",

"targets": [

{

"datasource": {

"type": "prometheus",

"uid": "${datasource}"

},

"disableTextWrap": false,